User Acceptance Testing (UAT) Schedule

We include this editable document in the Proposal Kit Professional. Order and download it for $199. Follow these steps to get started.

DOWNLOADABLE, ONE-TIME COST, NO SUBSCRIPTION FEES

Key Takeaways

- One-time License, No Subscriptions: Pay once and use Proposal Kit forever, with no monthly fees or per-use charges.

- Built for Business Projects: Start with a proven User Acceptance Testing (UAT) Schedule and tailor sections, fields, and branding for your day-to-day project work.

- Instant Access: Download immediately and open the document right away, with no waiting and no onboarding delay.

- Project-Ready Structure: Use a ready, professional layout for real-world project tasks (checklists, forms, analysis pages) so teams can execute consistently.

- Fully Editable in Word: Edit everything in Microsoft Word. Swap text, add/remove sections, and apply your logo/colors without special skills.

- Step-by-Step Wizard Help: The Proposal Pack Wizard guides you with training/help and keeps you moving, so you do not get stuck on formatting or assembly steps.

- Wizard Data Merge & Project Management: Let the Wizard manage projects and merge recurring data (company/client names, addresses, dates) across your project documents.

- Included in Proposal Kit Professional: Available exclusively in the Proposal Kit Professional bundle.

What Our Clients Say

"I’ve found Proposal Kit to be very useful over the years, I’ve been using it since 2004 and have found a bundle of useful information and guidance in the kit. Hopefully I will use it for years to come."

1. Get Proposal Kit Professional that includes this business document.

We include this User Acceptance Testing (UAT) Schedule in an editable format that you can customize for your needs.

2. Download and install after ordering.

Once you have ordered and downloaded your Proposal Kit Professional, you will have all the content you need to get started with your project management.

3. Customize the project template with your information.

You can customize the project document as much as you need. You can also use the included Wizard software to automate name/address data merging.

Use cases for this document

SilverPine Credit Union validates enterprise search before an audit window

The Challenge

UAT lead Maya Chen faces an audit deadline while business users struggle to find contracts, emails, and redacted images. The credit union needs business acceptance testing of metadata, fuzzy, wildcard, stemming, taxonomy, and date-range searches, with clear UAT test cases, UAT acceptance criteria, and a UAT environment separate from the production environment.

The Solution

She aligns UAT project stakeholders in a kick-off, defines the UAT scope, designs step-by-step UAT scripts, and sets up UAT dashboards to track pass/fail and defect aging. Proposal Kit supports the effort by assembling the internal proposal, governance brief, and status report templates, while the AI Writer produces a training guide and a summary report, the RFP Analyzer compares lightweight UAT tools, and line-item quoting estimates external triage hours.

The Implementation

Testers execute real-world scenarios, capture UAT feedback and UAT test evidence, and perform UAT defect retesting until regression testing is clean. Proposal Kit streamlines weekly updates and lessons-learned documents so executives can view progress and approve additional vendor hours with transparent line items.

The Outcome

Stakeholders grant UAT sign-off after all acceptance criteria are met, search accuracy improves, and the credit union proceeds to go live, confident that auditors can retrieve the right versions and redactions without risk.

Veridian eHealth streamlines clinical content retrieval across study sites

The Challenge

Product owner Dr. Luis Romero must ensure study site teams can locate protocol amendments and consent forms quickly, align with ePRO consortium expectations, and keep the development, test, and UAT hosting environments isolated from the production environment.

The Solution

He drafts UAT test scenarios, test data, and roles and responsibilities for UAT acceptance testing, mixing scripted and exploratory testing. Proposal Kit is used to create a data management review plan, a UAT training plan, and stakeholder briefings with AI Writer, while the RFP Analyzer compares hosting options and line-item quoting clarifies training and retest costs.

The Implementation

Real UAT users execute searches on provisioned and bring-your-own devices, log UAT defects with severity and priority, and cycle through UAT retesting. Real-time dashboards surface bottlenecks while Proposal Kit compiles concise progress reports and a closure memo for sponsors.

The Outcome

User adoption rises, traceability from requirements to outcomes is clear, stakeholders sign off after exit criteria are met, and the team distinguishes UAT vs beta testing to plan a controlled release.

NorthBridge Builders secures findability for bids and invoices during a platform migration

The Challenge

Operations director Jasmine Ortiz leads a migration where project managers need fast access to "Bids" and Accounts Payable records; past efforts blurred UAT vs system testing and led to scope creep and missed timelines.

The Solution

She adopts a risk-based UAT with design mapping, precise UAT test case steps, and business process owners paired with testers. Proposal Kit produces the change-impact brief, communications plan, and post-go-live release plan. AI Writer drafts executive updates, the RFP Analyzer evaluates OCR add-ons, and line-item quoting models remediation budgets.

The Implementation

Teams run end-to-end searches, verify sorting and native viewing, consolidate defect logs, and complete UAT defect retesting until pass criteria are met. UAT dashboards highlight progress, and Proposal Kit automates document assembly for weekly stakeholder reviews.

The Outcome

The business grants UAT sign-off, content retrieval time drops, and the company transitions to production with clear documentation, measurable business impact, and high confidence in search usability.

Abstract

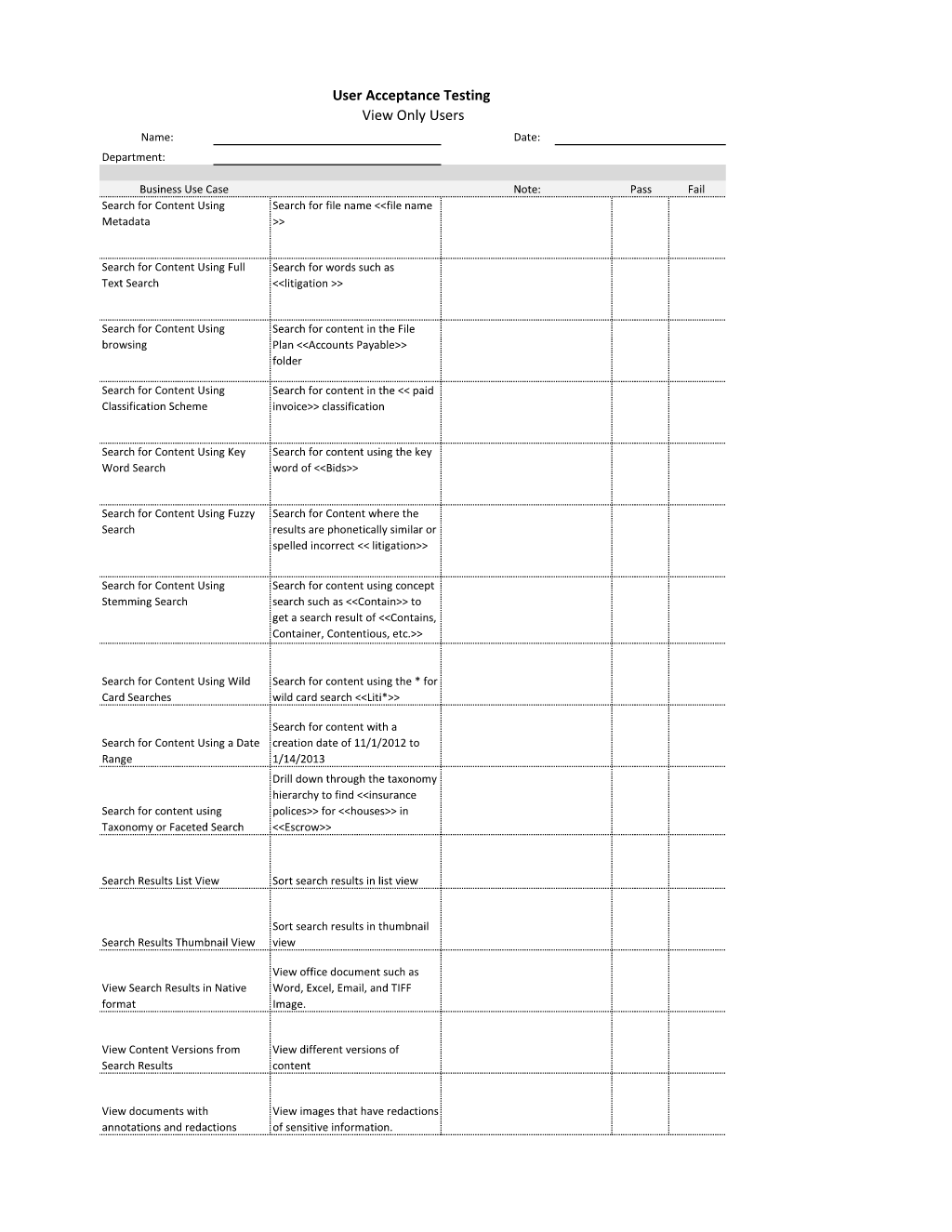

This document defines a business-focused acceptance test of enterprise search and retrieval capabilities in a content management system. It reads as a concise UAT plan that maps business requirements to clear test situations and test cases. The UAT scope centers on how end users locate, view, and manage records: metadata search by file name, full-text search for terms such as litigation, browsing a File Plan (e.g., Accounts Payable), classification-based queries (paid invoice), keyword queries (Bids), fuzzy search, stemming, wildcard searches (Liti*), and date-range filters. It also verifies taxonomy/faceted navigation to narrow to insurance policies for houses in escrow. Result handling is in scope: sorting in list and thumbnail views, opening native formats (Word, Excel, email, TIFF), viewing prior versions (a practical time-travel mechanism), and confirming annotations and redactions display correctly.
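As a rough illustration of how a tester might work out the expected outcome for a wildcard search such as Liti* combined with a date-range filter, the sketch below runs the same query against a small set of known seed records. The `Record` fields, the seed data, and the `expected_hits` helper are all hypothetical, and the case-sensitive match is an assumption; the system under test may treat case differently.

```python
from dataclasses import dataclass
from datetime import date
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Record:
    file_name: str
    keyword: str
    modified: date


# Hypothetical seed records loaded into the UAT environment.
SEED = [
    Record("claim-001.docx", "Litigation", date(2024, 1, 15)),
    Record("claim-002.docx", "Litigious", date(2024, 3, 2)),
    Record("bid-17.xlsx", "Bids", date(2024, 2, 20)),
    Record("claim-003.tif", "Litigation", date(2023, 11, 5)),
]


def expected_hits(records, pattern, start, end):
    """File names whose keyword matches the wildcard pattern
    and whose modified date falls within [start, end]."""
    return sorted(
        r.file_name
        for r in records
        if fnmatchcase(r.keyword, pattern) and start <= r.modified <= end
    )


hits = expected_hits(SEED, "Liti*", date(2024, 1, 1), date(2024, 12, 31))
print(hits)
```

The tester would then compare this list with what the search screen actually returns and record any difference as a finding.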

A practical UAT test plan would define UAT prerequisites, a UAT environment separate from production, and UAT participants (business users and UAT testers). Roles and responsibilities should include business process owners for business objective confirmation and requirements approval. The UAT stages (planning, execution, and closeout) benefit from a UAT checklist, UAT readiness checklist, and UAT test plan template. Test scripts and test case steps should specify test data, the name of the tester, expected outcomes, pass/fail outcomes, and UAT acceptance criteria such as accuracy, permissions, and performance across end-to-end business flows.
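The test script fields listed above (test data, tester name, expected outcomes, pass/fail) could be captured in a small record structure like the following sketch. The class and field names are illustrative assumptions, not part of the Proposal Kit template.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class UatStep:
    action: str
    expected: str
    actual: Optional[str] = None  # filled in by the tester during execution


@dataclass
class UatTestCase:
    case_id: str
    tester: str
    test_data: str
    steps: list[UatStep] = field(default_factory=list)

    def outcome(self) -> str:
        """'not run' until every step has an actual result, then pass/fail."""
        if not self.steps or any(s.actual is None for s in self.steps):
            return "not run"
        if all(s.actual == s.expected for s in self.steps):
            return "pass"
        return "fail"


# Hypothetical example values.
case = UatTestCase(
    case_id="TC-01",
    tester="Example Tester",
    test_data="file name: claim-001.docx",
    steps=[
        UatStep("Search metadata by file name", "1 result", "1 result"),
        UatStep("Open native document", "opens in Word", "viewer error"),
    ],
)
print(case.case_id, case.outcome())
```

Comparing expected and actual text exactly is a simplification; in practice the tester's judgment decides whether a step passes.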

During the UAT execution phase, teams capture UAT evidence, maintain a UAT findings log and defect logs, and drive defect management with defect severity and priority. Defect tracking, defect capture, and retesting support defect resolution. Progress reporting with dashboard visibility helps stakeholders monitor the UAT timeline, UAT execution defects, and risks.
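One possible shape for a defect log with severity and priority is sketched below, along with a helper that orders fixed defects for retesting. The severity levels, the status values, and the `retest_queue` function are assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    CRITICAL = 1
    MAJOR = 2
    MINOR = 3


@dataclass
class Defect:
    defect_id: str
    summary: str
    severity: Severity
    priority: int          # 1 = address first
    status: str = "open"   # assumed lifecycle: open -> fixed -> retested


def retest_queue(defects):
    """Fixed defects awaiting retest, most severe and highest priority first."""
    fixed = [d for d in defects if d.status == "fixed"]
    return sorted(fixed, key=lambda d: (d.severity, d.priority))


# Hypothetical log entries.
log = [
    Defect("D-1", "Redaction not displayed on TIFF", Severity.CRITICAL, 1, "fixed"),
    Defect("D-2", "Thumbnail sort order wrong", Severity.MINOR, 2, "fixed"),
    Defect("D-3", "Wildcard search returns no results", Severity.MAJOR, 1, "open"),
    Defect("D-4", "Prior version opens slowly", Severity.MAJOR, 2, "fixed"),
]
queue_ids = [d.defect_id for d in retest_queue(log)]
print(queue_ids)
```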

UAT exit criteria and UAT exit sign-off culminate in UAT sign-off approval by the sponsor or designee, confirming readiness to go live and informing any post-go-live release plan. As UAT best practices, avoid scope creep and do not repeat system testing. Distinguish UAT vs system testing, UAT vs integration testing, and UAT vs usability testing. Include compliance acceptance testing, operational acceptance testing, and business acceptance testing where appropriate.

Use cases span legal teams vetting litigation searches, finance verifying Accounts Payable retrievals, real estate validating escrow taxonomies, and regulated clinical study archives in an eCOA system across bring-your-own-device and provisioned devices in development, test, and production environments.

Proposal Kit can streamline this effort with document assembly for a UAT project plan, automated line-item quoting for services, an AI Writer to build supporting documents, and an extensive template library for checklists, matrices, and reports, helping teams produce thorough UAT documentation with ease.

To deepen the picture, consider how a disciplined UAT testing process runs through the UAT phases: planning, execution, and closeout. A structured UAT kick-off or UAT kick-off meeting sets UAT governance, UAT roles and responsibilities, UAT stakeholders, and UAT entry criteria. Teams complete knowledge gathering and scope definition, producing a requirements document and, where applicable, a study protocol.

UAT design and UAT design mapping translate UAT user stories and business requirements into UAT test situations and UAT test cases with clear UAT test case steps. Test management emphasizes traceability, using a UAT traceability matrix to link each step to acceptance criteria. Test data preparation yields reliable UAT test data, while UAT tester training covers tools, UAT recorder use, and UAT checklist best practices.
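A traceability matrix of this kind can be as simple as a mapping from each requirement to the test cases that cover it. The sketch below uses hypothetical requirement and test case IDs and flags requirements with no coverage.

```python
# Hypothetical requirement and test case identifiers.
requirements = {
    "REQ-1": "Metadata search by file name",
    "REQ-2": "Wildcard search",
    "REQ-3": "Date-range filter",
    "REQ-4": "Redactions display correctly",
}
test_cases = {
    "TC-01": ["REQ-1"],
    "TC-02": ["REQ-2", "REQ-3"],
}


def build_matrix(requirements, test_cases):
    """Map every requirement ID to the list of test cases that cover it."""
    matrix = {req_id: [] for req_id in requirements}
    for case_id, covered in test_cases.items():
        for req_id in covered:
            if req_id not in matrix:
                raise KeyError(f"{case_id} references unknown requirement {req_id}")
            matrix[req_id].append(case_id)
    return matrix


def uncovered(matrix):
    """Requirements that no test case traces to."""
    return [req_id for req_id, cases in matrix.items() if not cases]


matrix = build_matrix(requirements, test_cases)
print(uncovered(matrix))
```

Any requirement reported as uncovered is a prompt to add a test case or to confirm the requirement is out of UAT scope.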

During execution, use a separate UAT environment within the organization's development, test, and production strategy: development environment for builds, test environment for system testing and integration testing, and a controlled UAT hosting environment separate from production, with regression testing supporting end-to-end testing of real-world situations. Encourage exploratory testing alongside scripted tests to surface usability issues. Defect reporting captures UAT defects with defect severity and priority in defect logs, which are consolidated for UAT retesting and UAT bug fixes.

UAT monitoring dashboards and real-time dashboards provide progress visibility on the project timeline, UAT outcomes, UAT pass/fail, and UAT pass criteria. Collect UAT feedback via a UAT feedback form, apply a UAT risk-based approach for business impact, and maintain UAT automation only where appropriate (e.g., workflow automation for data loads, automated documentation of UAT test evidence).

Evaluation and closeout rely on a UAT summary report, UAT evaluation against UAT acceptance criteria, and UAT exit criteria sign-off. Ensure UAT sign-off communication and stakeholder sign-off; UAT sign-off confirms readiness for the production environment. Address UAT challenges and UAT mistakes, such as UAT scope creep and doing UAT too early, and distinguish UAT vs beta testing, alpha testing, unit testing, regression testing, and other acceptance testing types like contract acceptance testing to support user adoption.
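An exit-criteria check for the summary report might look like the sketch below. The specific rules (every case run, no failures, no open critical or major defects) are assumptions for illustration; the actual exit criteria are whatever the sponsor and stakeholders agree on.

```python
def evaluate_exit(outcomes, open_defect_severities, blocking=("critical", "major")):
    """Return (ready, reasons). `outcomes` maps test case ID to
    'pass', 'fail', or 'not run'; severities are lowercase strings."""
    reasons = []
    not_run = sorted(c for c, o in outcomes.items() if o == "not run")
    failed = sorted(c for c, o in outcomes.items() if o == "fail")
    if not_run:
        reasons.append("not run: " + ", ".join(not_run))
    if failed:
        reasons.append("failed: " + ", ".join(failed))
    blockers = [s for s in open_defect_severities if s in blocking]
    if blockers:
        reasons.append(f"{len(blockers)} open blocking defect(s)")
    return (not reasons, reasons)


# Hypothetical status at the end of a test cycle.
ready, reasons = evaluate_exit(
    {"TC-01": "pass", "TC-02": "fail", "TC-03": "not run"},
    ["minor", "major"],
)
print(ready, reasons)
```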

Regulated teams can fold in a data management review plan, study site monitoring, and ePRO consortium guidance, keeping UAT centered on business processes and driven by real users.

Proposal Kit helps author the supporting UAT documentation with ease, including UAT scope definition, UAT test management narratives, UAT test plan artifacts, UAT sign-off process and UAT exit sign-off pages, UAT approval records, UAT feedback forms, and traceability matrix sheets. Its document assembly, automated line-item quoting, AI Writer, and broad template library speed creation of consistent, professional UAT deliverables.

Expanding on the search-focused evaluation, teams benefit from a UAT step-by-step walkthrough for each search mode. For example, a tester selects metadata search, enters a file name, applies a date range, verifies results sort order, opens a native document, reviews prior versions, and records expected versus actual outcomes. This explicit sequence highlights the importance of UAT documentation because precise notes, screenshots, and UAT test evidence make defects reproducible and accelerate UAT defect retesting after fixes.

Position this work as UAT acceptance testing rather than technical checks. Business users validate that real queries, fuzzy search for misspellings, stemming for related terms, and taxonomy filters support decision making. Use lightweight UAT tools for test capture and time-stamped notes, and standardize UAT dashboards to show test throughput, pass/fail counts, and defect aging. Clear dashboards keep UAT project stakeholders aligned on risk and progress.
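The dashboard figures mentioned here (pass/fail counts and defect aging) can be derived from very little data. The following sketch assumes a list of test outcomes and a list of dates on which still-open defects were logged; the function name and the returned keys are hypothetical.

```python
from collections import Counter
from datetime import date


def summarize(outcomes, open_defect_dates, today):
    """Counts per outcome plus the age in days of the oldest open defect."""
    counts = Counter(outcomes)
    ages = [(today - opened).days for opened in open_defect_dates]
    return {
        "pass": counts["pass"],
        "fail": counts["fail"],
        "not_run": counts["not run"],
        "oldest_open_defect_days": max(ages, default=0),
    }


# Hypothetical snapshot for one reporting day.
snapshot = summarize(
    ["pass", "pass", "fail", "not run"],
    [date(2024, 3, 1), date(2024, 3, 8)],
    today=date(2024, 3, 10),
)
print(snapshot)
```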

Establish UAT hosting environments that are separate from production with realistic data and permissions. This isolation safeguards records and mirrors business processes while preventing unintended changes in live systems. Document the promotion path so that fixes verified in UAT move into the production environment in a controlled release.

Foster UAT collaboration by pairing business process owners with testers during scenario dry-runs, then scheduling focused sessions for UAT defect retesting. Encourage short feedback cycles and daily syncs to confirm whether search-result accuracy, redaction visibility, and version history behavior meet expectations. Tie each observation to a requirement ID and record it in the summary so stakeholders can trace outcomes to commitments.

Finally, define ownership for communications: who publishes the daily UAT dashboards, who approves readiness, and how sign-off is captured. A disciplined handoff ensures the organization enters go-live with confidence that users can find, sort, and view content as intended.

How do you write a User Acceptance Testing (UAT) Schedule document?

The editable User Acceptance Testing (UAT) Schedule document, complete with the actual formatting and layout, is available in the retail Proposal Kit Professional.

20% Off Discount

4.7 stars, based on 849 reviews

Related Documents

- Records Inventory Worksheet Questionnaire for Business Units

- Records Management Pre-Inventory Survey

- Records Survey and Inventory Analysis

- Business Case for Records Management

- Records Management Program Analysis Document

- Records Management Policies and Procedures

- Physical Content Management Box Label

- Records Access Security Plan

- Records Access Security Plan (Expanded)

- Records Management Metadata Model

- Records Management Governance Plan

- Records Management Topic Template

- Records Management Taxonomy Topic Template

- Records Management File Plan Template

- Records Management Expanded File Plan Template

- Application for Records Retention Form

- Authorization for Records Destruction Form

- Formal Record Hold Investigation Form

- Formal Record Hold Notice Form

- Release of Legal Hold Notice Form

- Record Retention Schedule Change Form

- Legal Hold and Discovery Log

- Records Disposition Log

- Stakeholder Concerns Log

- Communication Plan

- RACI Matrix Worksheet

- Risk Mitigation Plan

- Records Inventory Worksheet

- Records Management Return On Investment Calculator

- User Acceptance Testing (UAT) Enhancements and Bug Tracker

Ian Lauder has been helping businesses write their proposals and contracts for two decades. Ian is the owner and founder of Proposal Kit, one of the original sources of business proposal and contract software products started in 1997.

By Ian Lauder

Published by Proposal Kit, Inc.

We include a library of documents you can use based on your needs. All projects are different and have different needs and goals. Pick the documents from our collection, such as the User Acceptance Testing (UAT) Schedule, and use them as needed for your project.
